Getting Started¶

Resource Preparation¶

Before installing stream data processing templates, system operator libraries, or custom operator libraries, and before developing or running stream data processing pipelines, ensure that your OU has requested the Stream Processing resources on the EnOS Management Console > Resource Management page. The resource specification determines the performance of stream data processing pipelines.

The Stream Processing resources include the following types, which are requested separately for developing pipelines and for running them:

- Stream Designing: Used for installing stream data processing templates, system operator libraries, and custom operator libraries, and for designing stream processing pipelines.

- Standalone Processing: Used for running stream processing pipelines in standalone mode.

- Cluster Processing: Used for running stream processing pipelines in cluster mode.

For more information about requesting the Stream Processing resources, see Stream Processing Resource Specification.

When you no longer need to process stream data with the Stream Processing service, you can delete and release the requested Stream Processing resources on the Resource Management page to save costs.

Installing an Operator Library or Template¶

Before developing stream processing pipelines, you need to install the corresponding operator library or data calculation template.

Prerequisites¶

- Your account has access to the Stream Processing service. To obtain access, contact the system administrator.

- Your OU has requested the Stream Designing resource type of the Stream Processing resources. For more information, see Resource Preparation.

Installing a Data Calculation Template¶

Log in to the EnOS Management Console and click Stream Processing > Pipeline Library.

Click the Template tab to view the data calculation templates that can be installed. Currently, the following templates are available:

- Time Window Aggregation: Supports aggregation of numeric data for a single measurement point of a single device.

- Electric Energy Cal by Meter Reading: Supports calculation of daily electric energy from meter reading data.

- Electric Energy Cal by Instant Power: Supports calculation of daily electric energy from instant power data.

- Electric Energy Cal by Average Power: Supports calculation of daily electric energy from average power data.

Find the template to be installed and click Install. The system will start the installation immediately.

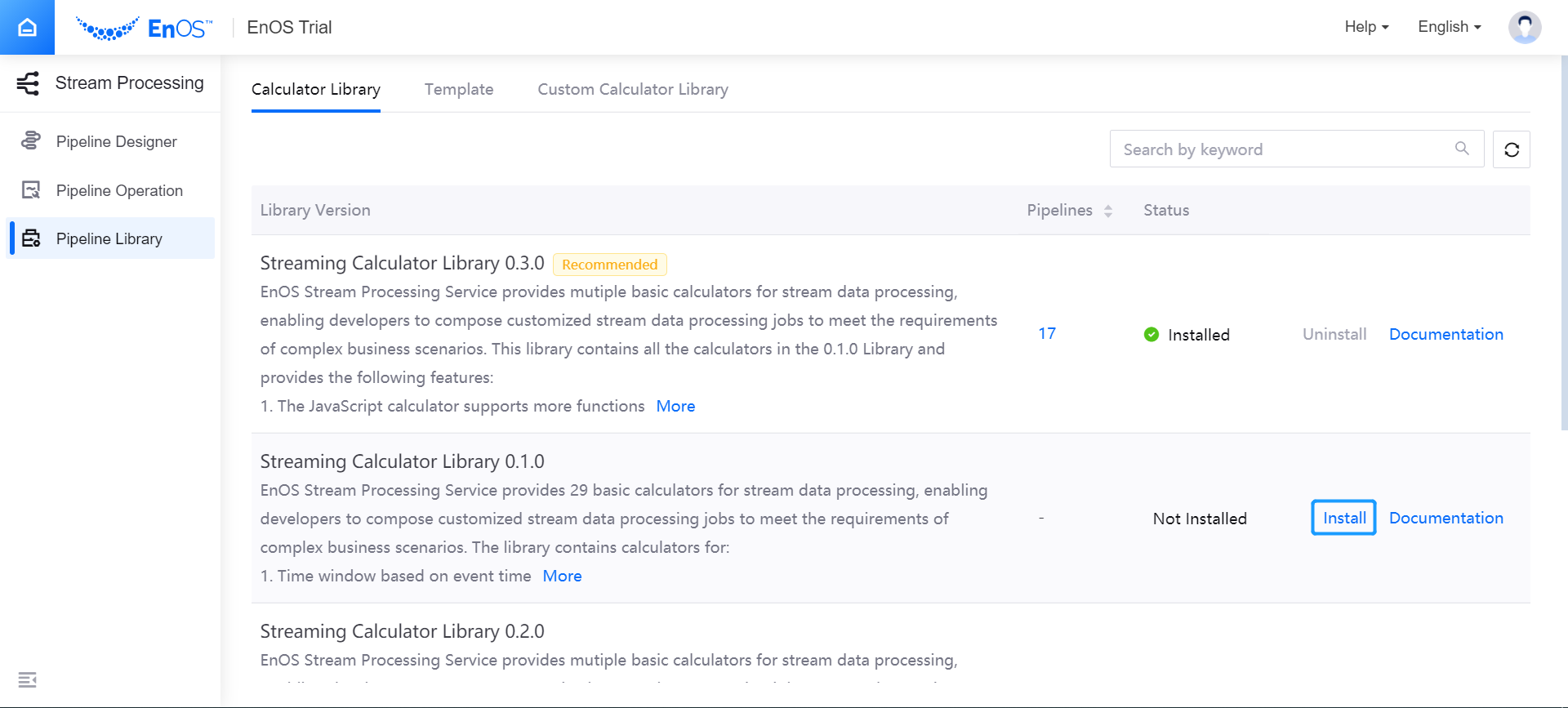

Installing a System Operator Library¶

Log in to the EnOS Management Console and click Stream Processing > Pipeline Library.

Under the Calculator Library tab, view the system libraries that can be installed.

Find the library to be installed and click Install. The system will start the installation immediately.

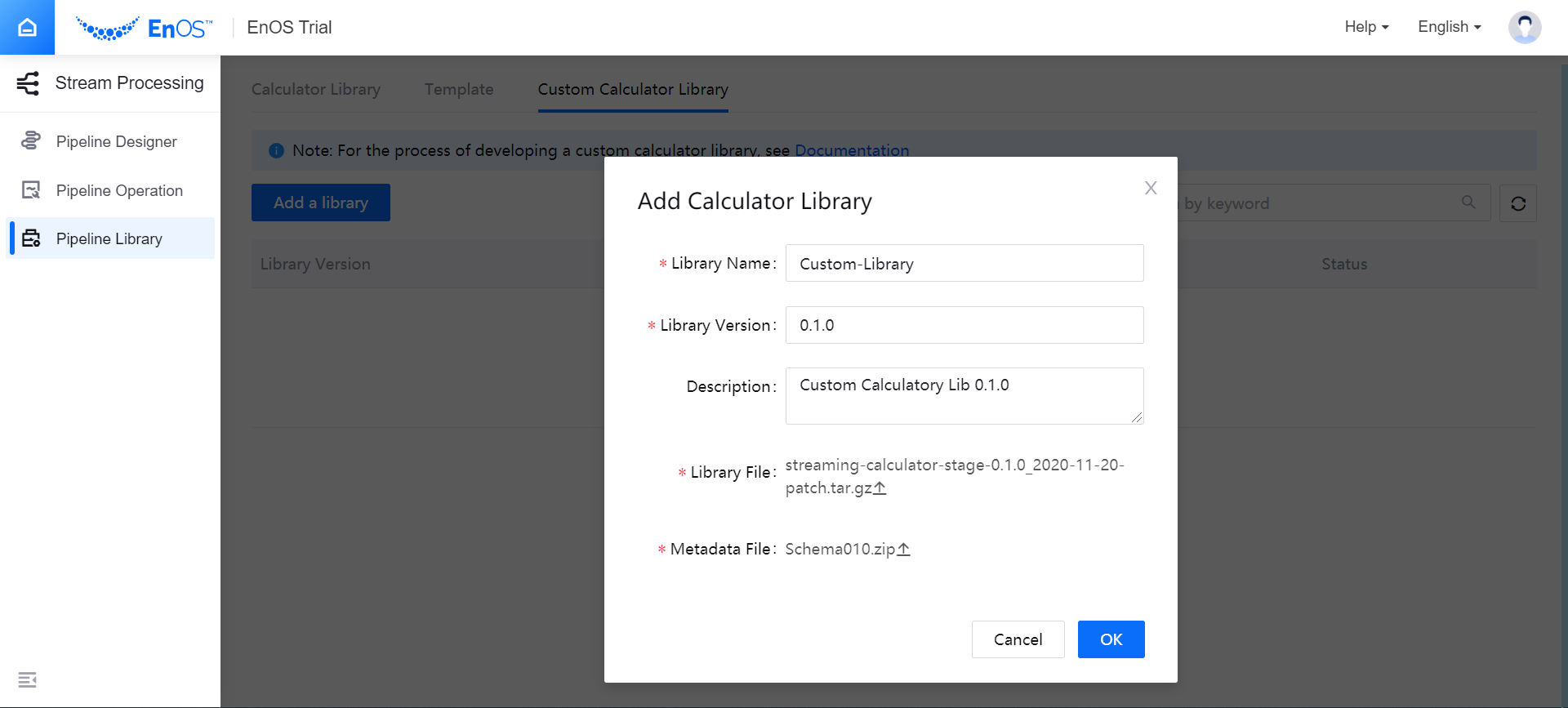

Installing a Custom Operator Library¶

Log in to the EnOS Management Console and click Stream Processing > Pipeline Library.

Under the Custom Calculator Library tab, click Add a library to upload and install a custom library.

- Enter the library name, version, and description. The library name can be reused, but the library version must be newer than that of any existing library with the same name.

- Upload the library file (in .tar.gz format, no larger than 300 MB).

- Upload the metadata file (in .zip format, no larger than 5 MB).
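The upload constraints listed above can be checked locally before opening the console. The following Python sketch is only an illustration: the helper names `parse_version` and `check_upload` are hypothetical, "MB" is assumed to mean 1024 × 1024 bytes, and versions are assumed to be dotted numbers such as `1.2.0`. The console may apply different rules.

```python
import re

MIB = 1024 * 1024
MAX_LIBRARY_BYTES = 300 * MIB   # assumption: 300 MB read as 300 MiB
MAX_METADATA_BYTES = 5 * MIB    # assumption: 5 MB read as 5 MiB


def parse_version(version):
    """Turn a dotted numeric version such as '1.2.0' into a comparable tuple."""
    if not re.fullmatch(r"\d+(\.\d+)*", version):
        raise ValueError(f"unsupported version format: {version!r}")
    return tuple(int(part) for part in version.split("."))


def check_upload(library_file, library_bytes, metadata_file, metadata_bytes,
                 new_version, existing_versions):
    """Return a list of problems; an empty list means all checks passed."""
    problems = []
    if not library_file.endswith(".tar.gz"):
        problems.append("library file must be a .tar.gz archive")
    if library_bytes > MAX_LIBRARY_BYTES:
        problems.append("library file exceeds 300 MB")
    if not metadata_file.endswith(".zip"):
        problems.append("metadata file must be a .zip archive")
    if metadata_bytes > MAX_METADATA_BYTES:
        problems.append("metadata file exceeds 5 MB")
    # The new version must be newer than every version already uploaded.
    newest = max((parse_version(v) for v in existing_versions), default=None)
    if newest is not None and parse_version(new_version) <= newest:
        problems.append("version must be newer than the existing library")
    return problems


print(check_upload("ops.tar.gz", 10 * MIB, "ops-meta.zip", MIB,
                   "1.1.0", ["1.0.0", "1.0.5"]))  # []
```

Passing file names and sizes as plain arguments keeps the check independent of the file system; in practice the sizes could come from `os.path.getsize`.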

Click OK to start uploading the custom library file and metadata file. When the upload is completed, the custom library is displayed in the list of custom operator libraries with the status Not Installed.

Click Install. The system will start the installation immediately.

Uninstalling Templates or Operator Libraries¶

If your business does not need the installed templates, system operator libraries, or custom operator libraries, or if you want to install a newer version, you can uninstall the libraries or templates to release the Stream Designing resource.

Note

Before uninstalling a template or an operator library, ensure that no stream processing pipeline is still using the template or library.

Numeric Data Aggregation Tutorial¶

This section shows how to process numeric stream data with the Time Window Aggregation template.

Prerequisites¶

- Your account has access to the Stream Processing service. To obtain access, contact the system administrator.

- Your OU has requested the Stream Processing resources. For more information, see Resource Preparation.

- You have installed the Time Window Aggregation template through the Pipeline Library page. For more information, see Installing a Data Calculation Template.

- The device is connected and is sending data to the cloud.

Procedure¶

The procedure for processing numeric stream data with the Time Window Aggregation template is as follows.

- Design and create a stream processing pipeline using the template.

- Save and publish the stream processing pipeline.

- Configure the running resources for the stream processing pipeline.

- Start the stream processing pipeline.

- Monitor the running status and results of the job.

Goal and Data Preparation¶

Goal

The goal of this guide is to get the maximum value of the test_raw input point every 5 minutes and output the calculated value to the output point test_5min.
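As a rough illustration of what this goal means, the following Python sketch groups timestamped readings into fixed 5-minute windows and keeps the maximum of each window. It is not the template's implementation: the function name `tumbling_max`, the use of epoch seconds as timestamps, and keying each result by its window start are assumptions made for the example.

```python
WINDOW_SECONDS = 5 * 60  # 5-minute window, as in this guide


def tumbling_max(readings, window_seconds=WINDOW_SECONDS):
    """Return {window_start: max value} for (epoch_seconds, value) readings."""
    result = {}
    for timestamp, value in readings:
        # Align the timestamp down to the start of its fixed-size window.
        start = timestamp - timestamp % window_seconds
        if start not in result or value > result[start]:
            result[start] = value
    return result


# Hypothetical test_raw readings: (seconds since epoch, value).
sample = [(0, 1.5), (120, 4.0), (299, 2.0), (300, 3.0), (450, 0.5)]
print(tumbling_max(sample))  # {0: 4.0, 300: 3.0}
```

Each reading falls into exactly one window, so the first three samples produce one test_5min value and the last two produce the next.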

Data Preparation

Model configuration: The model used in this guide (testModel) is configured as follows.

| Feature Type | Name | Identifier | Point Type | Data Type |
|---|---|---|---|---|
| Measurement Point | test_raw | test_raw | AI | double |
| Measurement Point | test_5min | test_5min | AI | double |

Note

- In this example, test_raw is the input point, and test_5min is the output point.

- Ensure that both the input point and the output point are of the same type.

- Storage configuration: Configure the input point test_raw as AI raw data and the output point test_5min as minute-level normalized AI data. For more information, see Configuring TSDB Storage.

- Data ingestion: For information about data ingestion of input point test_raw, see Quick Start: Connecting a Smart Device to EnOS Cloud.

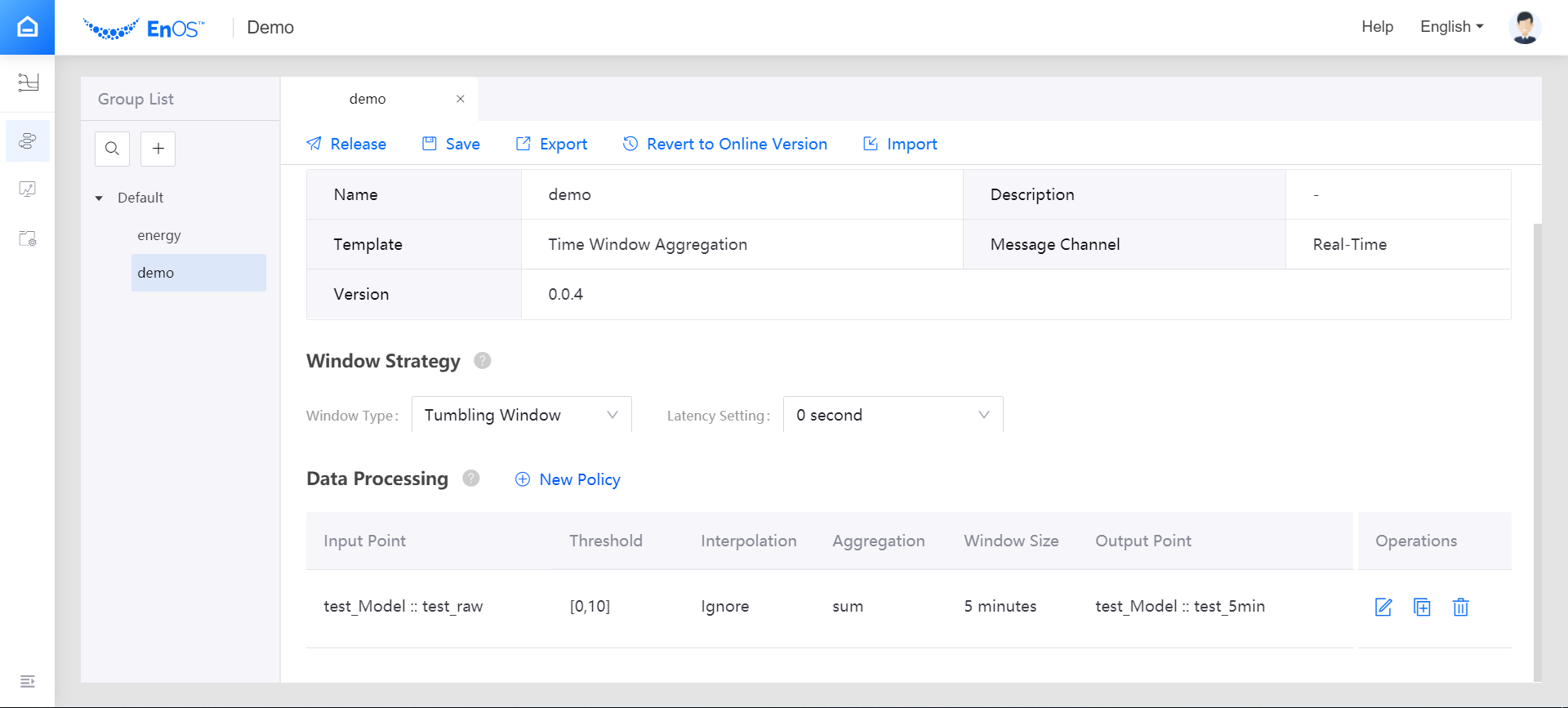

Step 1. Create a Stream Processing Pipeline¶

- Log in to the EnOS Management Console and click Stream Processing > Pipeline Designer to view all the stream processing pipelines created within the organization. You can double-click a pipeline to view and edit its configuration.

- Click the + icon above the pipeline list to create a stream processing pipeline. Select General as the pipeline type and New as the creation method, and enter the name and description of the stream data processing pipeline.

- From the Template drop-down list, select the installed Time Window Aggregation template, and select the version of the template from the Version drop-down list.

- Select Real-Time as the message channel.

- Configure the window strategy of the stream processing pipeline.

  - Window Type: Select Tumbling Window. Tumbling windows have a fixed size and do not overlap.

  - Latency Setting: Select the time extension for late-arriving data. If 0 seconds is selected, late-arriving data will not be processed.

- Data Processing: Click New Policy to add a data processing policy row.

  - Input Point: Select the measurement point of the input data. In this example, select the test_raw point of the testModel.

  - Threshold: Specify the threshold for filtering raw data before processing.

  - Interpolation: Select the interpolation algorithm used to handle input data that exceeds the threshold. Currently, the only supported interpolation strategy is Ignore.

  - Aggregation: Select the function used to compute the valid data in the window. For this guide, choose max.

  - Window Size: Select the duration of the time window, which determines how much data is computed in a single window. For this guide, choose 5 minutes.

  - Output Point: Select the point that receives the processed result. In this example, select the test_5min point.
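To make the Latency Setting, Threshold, and Interpolation options above more concrete, here is a minimal Python sketch of one plausible reading of them. It rests on several assumptions that the template may not share: the threshold is modeled as an inclusive `[low, high]` range, a window is treated as closed once a watermark (the stream's notion of current event time) reaches the window end plus the configured latency, and all function names are hypothetical.

```python
from typing import Iterable, Optional

WINDOW_SECONDS = 5 * 60  # Window Size chosen in this guide


def window_start(event_ts: int, window_seconds: int = WINDOW_SECONDS) -> int:
    """Start of the tumbling window that contains event_ts (epoch seconds)."""
    return event_ts - event_ts % window_seconds


def accepts_record(event_ts: int, watermark_ts: int, latency_seconds: int,
                   window_seconds: int = WINDOW_SECONDS) -> bool:
    """True while the record's window is still open.

    Assumption: a window closes once the watermark reaches the window end
    plus the configured latency.
    """
    window_end = window_start(event_ts, window_seconds) + window_seconds
    return watermark_ts < window_end + latency_seconds


def aggregate_max(values: Iterable[float], low: float,
                  high: float) -> Optional[float]:
    """Ignore values outside [low, high], then return the max of the rest."""
    valid = [v for v in values if low <= v <= high]
    return max(valid) if valid else None


# A record stamped 299 s arrives when the watermark is already at 310 s.
print(accepts_record(299, 310, latency_seconds=0))   # False
print(accepts_record(299, 310, latency_seconds=60))  # True
print(aggregate_max([1.5, 4.0, 9999.0], low=0.0, high=100.0))  # 4.0
```

With a latency of 0 seconds the late record is dropped, which matches the behavior described for the Latency Setting; a window whose values are all outside the threshold produces no result in this sketch.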

Step 2. Save and Publish the Stream Processing Pipeline¶

When the stream processing configuration is completed, you can save and publish the data aggregation job online by clicking the Release icon. See the following example:

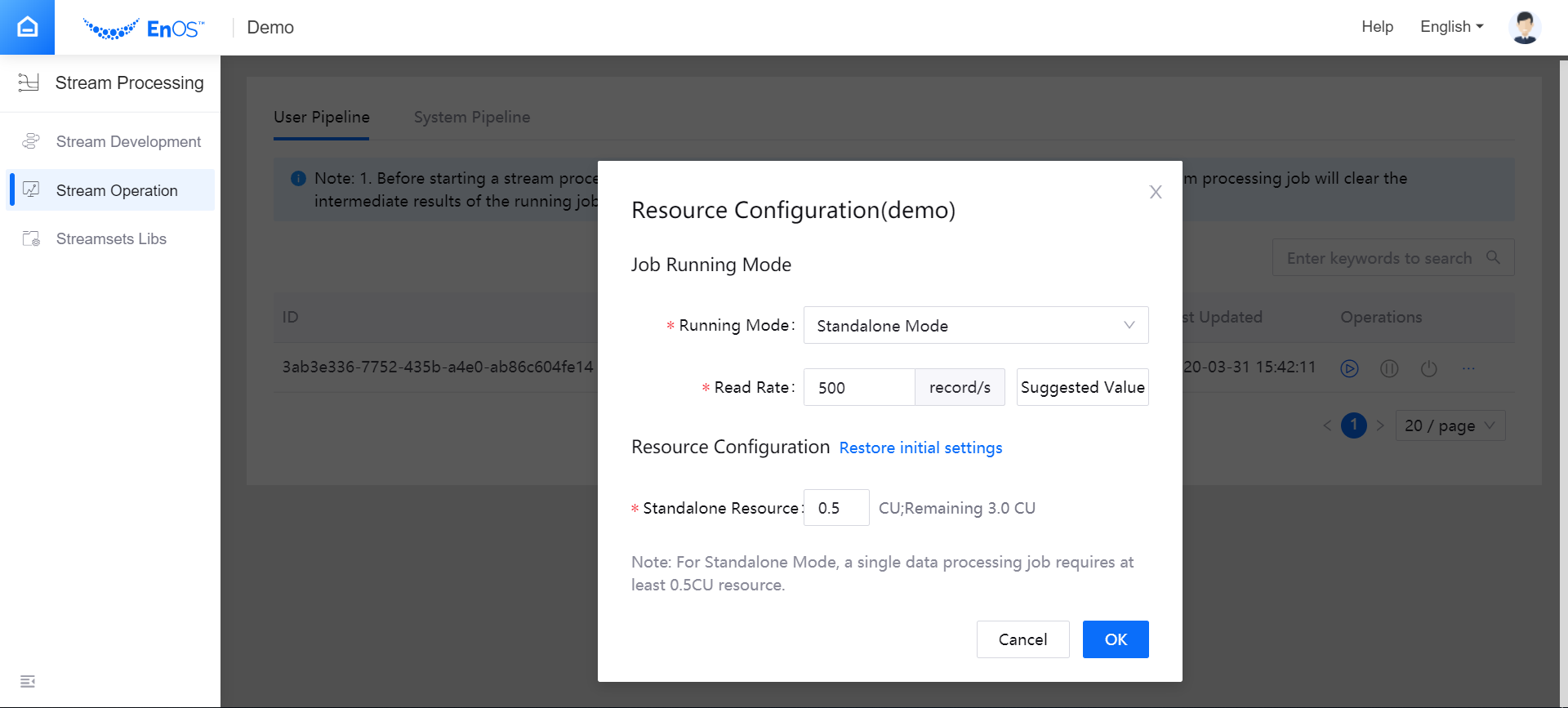

Step 3. Configure the Running Resources for the Stream Processing Pipeline¶

Open the Stream Processing > Pipeline Operation page and find the published stream processing job. The default status of the job is PUBLISHED. Before starting the job, configure the running resources that the job requires as follows.

- From the Operations column, select … > Configure Resource.

- In the pop-up window, select Standalone Mode as the running mode, enter the data reading rate, and enter the required running resource for the job (CUs).

- Click OK to save the configuration.

Note

Before configuring the running resources, ensure that your OU has requested the required Stream Processing resources. For more information, see Managing Resources.

Step 4. Start the Stream Processing Pipeline¶

The required system pipelines must be running before you start a stream processing pipeline. For this guide, start the Data Reader RealTime system pipeline first.

- On the Pipeline Operation page, click the System Pipeline tab, and start the corresponding system pipeline for the stream processing pipeline.

- Click the User Pipeline tab, find the published stream processing pipeline, and click the Start icon for the job in the Operations column to start running the job.

Step 5. View the Running Results of the Job¶

On the Pipeline Operation page, find the running job, and click the job name to open the Stream Details page. You can view the following information about the job.

- Summary: View the summary of the running job, such as the overall data processing records and the data aggregation records for a specific period.

- Log: Click the View Logs icon in the upper-right corner to check the running log of the job.

- Results: The processed data will be stored in TSDB according to the configured storage policy. Call the corresponding API to get the stored data. For more information about data service APIs, go to EnOS Management Console > EnOS API.